
2024/08/02

[Demo: Animals Singing Dance Monkey 🎤]

🎉 We are excited to announce the release of a new version featuring an animals mode, along with several other updates. Special thanks to the LivePortrait team for their dedicated efforts. 💪 We also provide a one-click installer for Windows users; check out the details here.

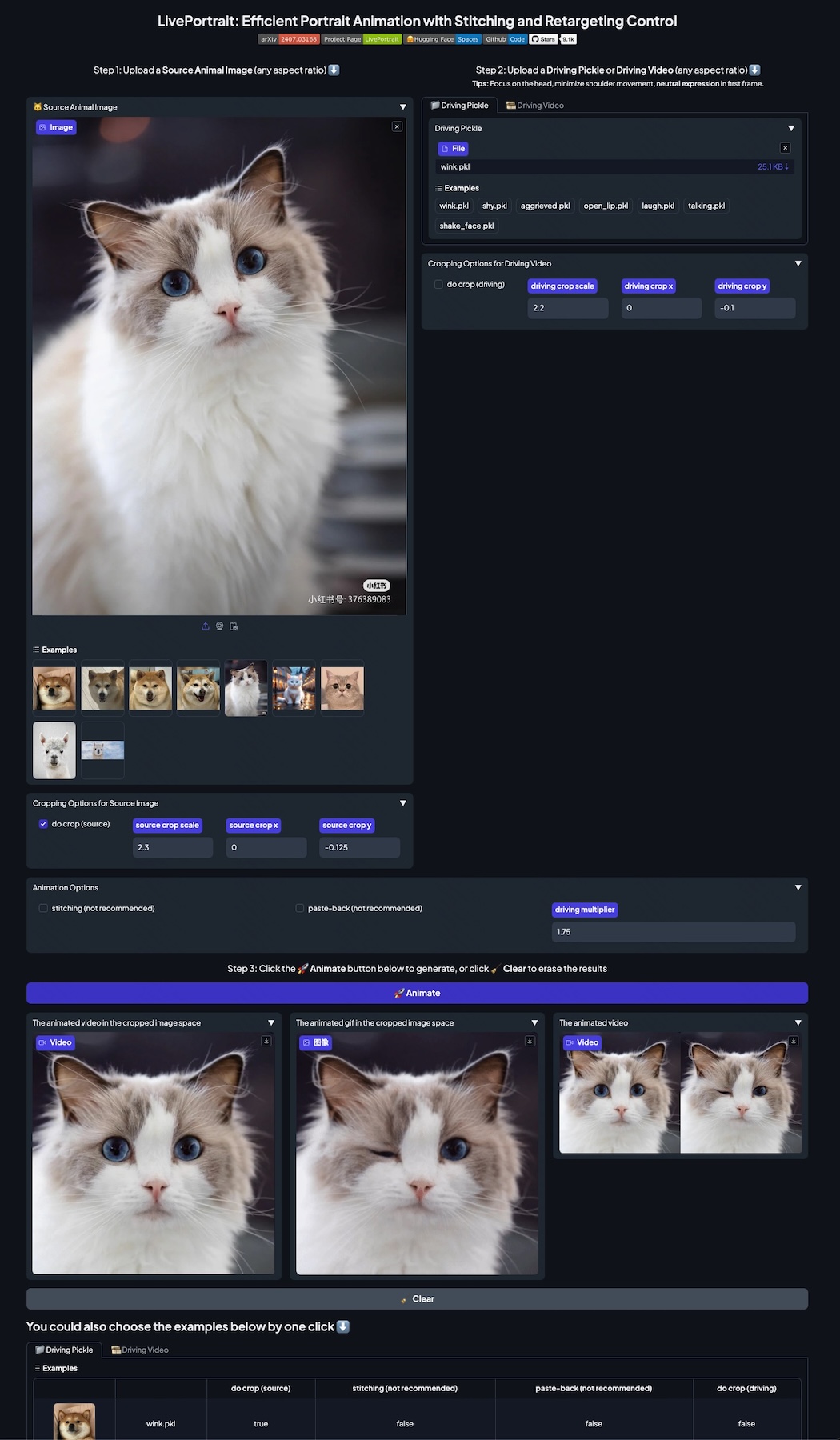

Updates on Animals mode

We are pleased to announce the release of the animals mode, which is fine-tuned on approximately 230K frames of various animals (mostly cats and dogs). The trained weights are provided in the `liveportrait_animals` subdirectory, available on HuggingFace or Google Drive. Download the weights before running. There are two ways to run this mode: the `inference_animals.py` script and the Gradio app.

Please note that we have not trained the stitching and retargeting modules for the animals model due to several technical issues; this may be addressed in future updates. We therefore recommend disabling stitching by passing the `--no_flag_stitching` option when running the model. Paste-back is also not recommended.

Install X-Pose

We have chosen X-Pose as the keypoint detector for animals. It relies on `transformers==4.22.0` and `pillow>=10.2.0` (both already updated in `requirements.txt`) and requires building a custom OP named `MultiScaleDeformableAttention`.
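Before building anything, it can help to confirm that the active environment actually has the two pinned packages. The following is a minimal sketch, assuming only the version pins quoted above; the helper names (`parse_release`, `satisfies`, `check_requirements`) are hypothetical and not part of LivePortrait.

```python
# Minimal sketch (hypothetical helpers): compare installed package versions
# against the X-Pose pins from the release notes. Pre-release/local suffixes
# such as ".dev0" or "rc1" are ignored by this simplified comparison.
from importlib.metadata import PackageNotFoundError, version
from typing import Dict, Optional, Tuple

# Pins taken from the release notes; the (name, operator, version) layout is ours.
REQUIREMENTS = [
    ("transformers", "==", "4.22.0"),
    ("pillow", ">=", "10.2.0"),
]


def parse_release(ver: str) -> Tuple[int, int, int]:
    """Turn '10.2.0' or '4.22.0.dev0' into (major, minor, patch)."""
    parts = []
    for piece in ver.split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break  # stop at the first non-numeric segment such as "dev0"
        parts.append(int(digits))
    while len(parts) < 3:
        parts.append(0)  # treat "10.2" as "10.2.0"
    return tuple(parts[:3])


def satisfies(installed: str, op: str, required: str) -> bool:
    """Check one requirement; only the two operators used above are supported."""
    have, want = parse_release(installed), parse_release(required)
    if op == "==":
        return have == want
    if op == ">=":
        return have >= want
    raise ValueError(f"unsupported operator: {op}")


def check_requirements() -> Dict[str, Tuple[Optional[str], bool]]:
    """Map each package to (installed version or None, requirement satisfied?)."""
    results = {}
    for dist, op, required in REQUIREMENTS:
        try:
            installed = version(dist)
        except PackageNotFoundError:
            installed = None
        ok = installed is not None and satisfies(installed, op, required)
        results[dist] = (installed, ok)
    return results


if __name__ == "__main__":
    for dist, (installed, ok) in check_requirements().items():
        print(f"{dist}: installed={installed}, ok={ok}")
```

Run it inside the environment you use for LivePortrait. The comparison is deliberately simple; for full PEP 440 semantics, the `packaging` library's `Version` and `SpecifierSet` are the usual choice.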

Linux and Windows users should first set up PyTorch by following the PyTorch installation instructions.

Next, build the OP `MultiScaleDeformableAttention` by running:

```bash
cd src/utils/dependencies/XPose/models/UniPose/ops
python setup.py build install
cd - # this returns to the previous directory
```
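After the build, a quick way to check that the current interpreter can see the compiled extension is to look it up by module name. This is a minimal sketch, assuming the build installs a top-level module called `MultiScaleDeformableAttention` (the convention used by Deformable-DETR-style ops); if your build names it differently, pass that name instead. `op_is_installed` is a hypothetical helper.

```python
# Minimal sketch: locate the compiled op without importing it.
# Assumption: the extension is installed as a top-level module named
# "MultiScaleDeformableAttention"; adjust the name if your build differs.
import importlib.util


def op_is_installed(module_name: str = "MultiScaleDeformableAttention") -> bool:
    """Return True if the module can be found on the current sys.path."""
    return importlib.util.find_spec(module_name) is not None


if __name__ == "__main__":
    if op_is_installed():
        print("MultiScaleDeformableAttention found")
    else:
        print("MultiScaleDeformableAttention not found; rerun the build step")
```

Finding the module only shows it was installed for this interpreter; it can still fail to load if, for example, it was compiled against a different PyTorch or CUDA version.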

To run the model, use the `inference_animals.py` script:

```bash
python inference_animals.py -s assets/examples/source/s39.jpg -d assets/examples/driving/wink.pkl --no_flag_stitching --driving_multiplier 1.75
```
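If you want to run the same driving template over several source images, you can assemble the command in Python so that `--no_flag_stitching` is never forgotten. This is a minimal sketch; `animals_command` is a hypothetical helper, and only the script name, paths, and flags come from the command above.

```python
# Minimal sketch: build the animals-mode command shown above for scripting.
# `animals_command` is hypothetical; script name and flags are from the notes.
import shlex
import sys
from typing import List


def animals_command(source: str, driving: str, driving_multiplier: float = 1.0) -> List[str]:
    return [
        sys.executable, "inference_animals.py",
        "-s", source,
        "-d", driving,
        "--no_flag_stitching",  # stitching is not trained for the animals model
        "--driving_multiplier", str(driving_multiplier),
    ]


if __name__ == "__main__":
    sources = ["assets/examples/source/s39.jpg"]  # add more source images here
    for src in sources:
        cmd = animals_command(src, "assets/examples/driving/wink.pkl", 1.75)
        # To actually run it from the repository root: subprocess.run(cmd, check=True)
        print(shlex.join(cmd))
```

Passing the arguments as a list (rather than one shell string) avoids quoting problems with paths that contain spaces.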

Alternatively, you can use Gradio for a more user-friendly interface. Launch it with:

```bash
python app_animals.py # optional flags: --server_port 8889 --server_name "0.0.0.0" --share
```

Warning

X-Pose is intended only for non-commercial scientific research purposes. If your use is commercial, you should remove X-Pose and replace it with another keypoint detector.

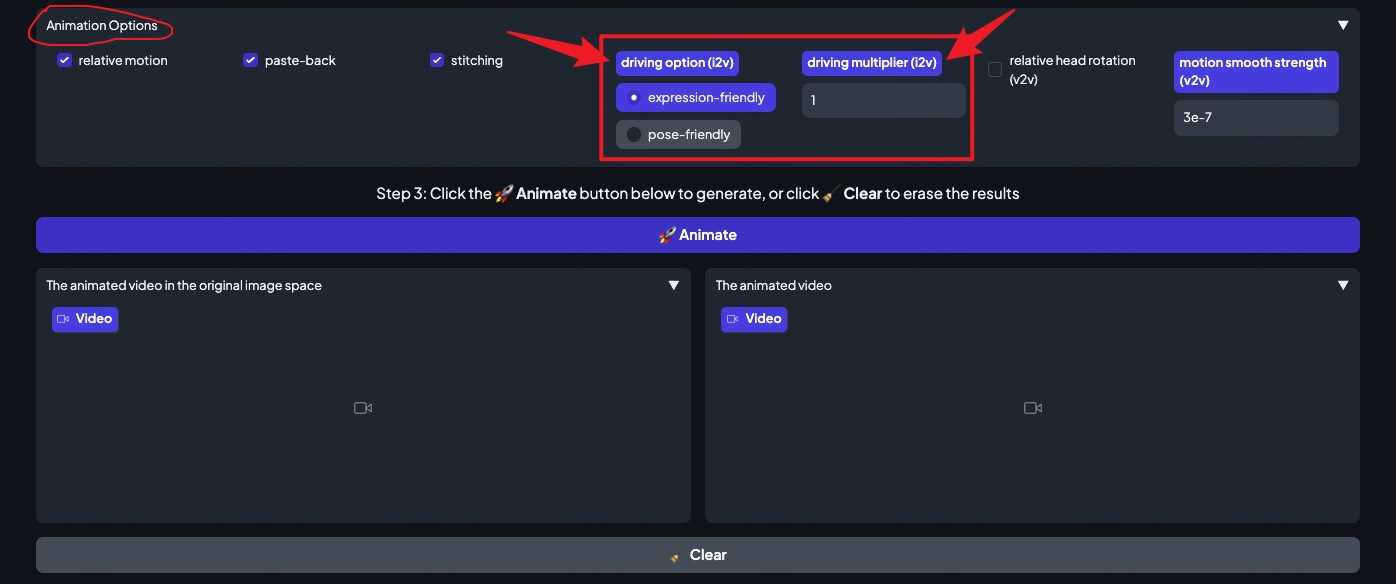

Updates on Humans mode

- Driving Options: We have introduced an `expression-friendly` driving option that reduces head wobbling; it is now the default. It may be less effective with large head poses, in which case you can select the `pose-friendly` option, which behaves the same as the previous version. Set this with `--driving_option` or select it in the Gradio interface. We have also added a `--driving_multiplier` option to adjust driving intensity; it defaults to 1 and can also be set in the Gradio interface.

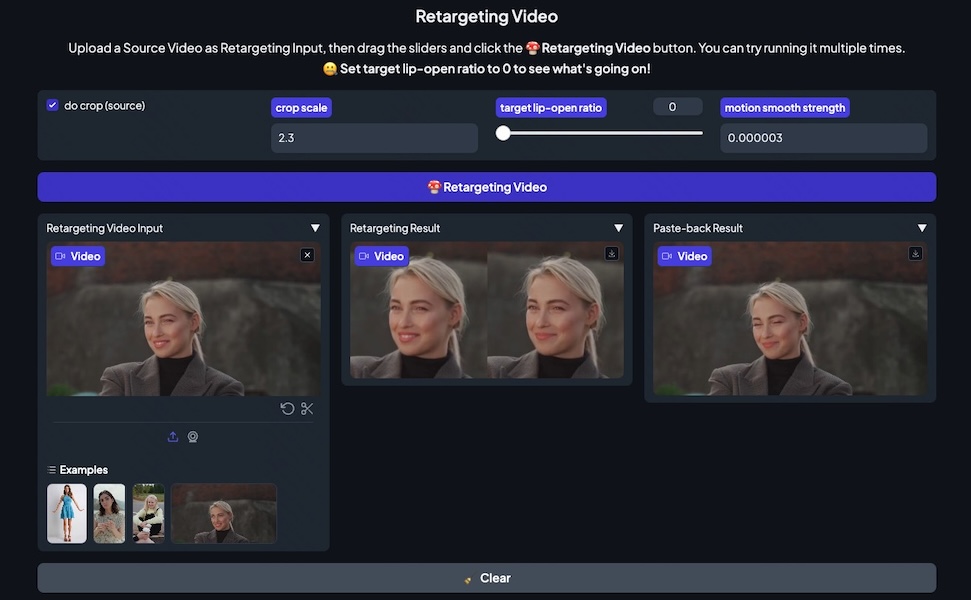
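The release notes describe `--driving_multiplier` only as a way to adjust driving intensity. As an illustration of what "intensity" can mean, here is a sketch of one natural reading: scale each driven keypoint's displacement from its source position. This formula is an assumption for illustration, not a description of LivePortrait's code, and `apply_multiplier` is a hypothetical name.

```python
# Illustrative sketch only (assumed formula, not LivePortrait's implementation):
# scale how far each driven keypoint moves away from its source position.
from typing import List, Tuple

Point = Tuple[float, float, float]


def apply_multiplier(source: List[Point], driven: List[Point], multiplier: float = 1.0) -> List[Point]:
    if len(source) != len(driven):
        raise ValueError("source and driven keypoints must have the same length")
    out = []
    for s, d in zip(source, driven):
        # multiplier 1 keeps the driven motion, 0 freezes at the source pose,
        # values above 1 exaggerate the motion
        out.append(tuple(si + (di - si) * multiplier for si, di in zip(s, d)))
    return out


if __name__ == "__main__":
    src = [(0.0, 0.0, 0.0), (1.0, 1.0, 1.0)]
    drv = [(0.5, 0.0, 0.0), (1.0, 2.0, 1.0)]
    print(apply_multiplier(src, drv, 2.0))
```

Under this reading, the default of 1 reproduces the driving motion unchanged, while a value like the 1.75 used in the animals example amplifies it.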

- Retargeting Video in Gradio: We have implemented a video retargeting feature. You can specify a `target lip-open ratio` to adjust the mouth movement in the source video. For instance, setting it to 0 closes the mouth in the source video 🤐.
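To make "a lip-open ratio of 0 closes the mouth" concrete, the sketch below uses a common geometric definition: the gap between upper and lower lip divided by mouth width, and moves the lower lip so the ratio hits a target. Both the formula and the landmark roles are assumptions for illustration; they are not taken from the release notes, and the helper names are hypothetical.

```python
# Illustrative sketch (assumed definition, hypothetical helpers):
# lip-open ratio = lip gap / mouth width, and retargeting to a chosen ratio.
import math
from typing import Tuple

Point2D = Tuple[float, float]


def lip_open_ratio(upper: Point2D, lower: Point2D, left: Point2D, right: Point2D) -> float:
    width = math.dist(left, right)
    if width == 0:
        raise ValueError("mouth corners coincide; cannot normalize")
    return math.dist(upper, lower) / width


def retarget_lower_lip(upper: Point2D, lower: Point2D, left: Point2D, right: Point2D,
                       target: float) -> Point2D:
    """Move the lower lip along the upper-to-lower direction to reach `target`."""
    if target < 0:
        raise ValueError("target ratio must be non-negative")
    current = lip_open_ratio(upper, lower, left, right)
    if current == 0:
        return lower  # closed mouth gives no direction to open along
    scale = target / current  # target 0 collapses the lower lip onto the upper lip
    return (upper[0] + (lower[0] - upper[0]) * scale,
            upper[1] + (lower[1] - upper[1]) * scale)


if __name__ == "__main__":
    upper, lower, left, right = (0.0, 0.5), (0.0, -0.5), (-1.0, 0.0), (1.0, 0.0)
    print(lip_open_ratio(upper, lower, left, right))
    print(retarget_lower_lip(upper, lower, left, right, 0.0))
```

Normalizing by mouth width keeps the ratio independent of how large the face appears in the frame.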

Others

- Poe supports LivePortrait. Check out the news on X.

- ComfyUI-LivePortraitKJ (1.1K 🌟) now includes MediaPipe as an alternative to InsightFace, ensuring the license remains under MIT and Apache 2.0.

- ComfyUI-AdvancedLivePortrait features real-time portrait pose/expression editing and animation, and is registered with ComfyUI-Manager.

Below are some screenshots of the new features and improvements:

[Screenshot: The Gradio Interface of Animals Mode]

[Screenshot: Driving Options and Multiplier]

[Screenshot: The Feature of Retargeting Video]