mirror of

https://github.com/k4yt3x/video2x.git

synced 2024-12-27 14:39:09 +00:00

docs(book): added the docs.video2x.org mdBook source files and pipeline

Signed-off-by: k4yt3x <i@k4yt3x.com>


parent a77cf9e14f

commit 149cf1ca4a

46

.github/workflows/docs.yml

vendored

Normal file


@ -0,0 +1,46 @@

name: Docs

on:
  push:
    branches: ["master"]
  workflow_dispatch:

permissions:
  contents: read
  pages: write
  id-token: write

concurrency:
  group: "pages"
  cancel-in-progress: false

jobs:
  deploy:
    environment:
      name: github-pages
      url: ${{ steps.deployment.outputs.page_url }}
    runs-on: ubuntu-latest
    steps:
      - name: Checkout
        uses: actions/checkout@v4

      - name: Install mdBook
        run: |
          version="$(curl https://api.github.com/repos/rust-lang/mdBook/releases/latest | jq -r '.tag_name')"
          curl -sSL "https://github.com/rust-lang/mdBook/releases/download/$version/mdbook-$version-x86_64-unknown-linux-musl.tar.gz" | tar -xz
          sudo mv mdbook /usr/local/bin/

      - name: Build Docs with mdBook
        run: mdbook build -d "$PWD/build/docs/book" docs/book

      - name: Setup Pages
        uses: actions/configure-pages@v5

      - name: Upload artifact
        uses: actions/upload-pages-artifact@v3
        with:
          path: "build/docs/book"

      - name: Deploy to GitHub Pages
        id: deployment
        uses: actions/deploy-pages@v4

1

docs/book/.gitignore

vendored

Normal file


@ -0,0 +1 @@

book

11

docs/book/book.toml

Normal file


@ -0,0 +1,11 @@

[book]
authors = ["k4yt3x"]
language = "en"
multilingual = false
title = "Video2X Documentation"

[output.html]
default-theme = "ayu"
preferred-dark-theme = "ayu"
git-repository-url = "https://github.com/k4yt3x/video2x"
edit-url-template = "https://github.com/k4yt3x/video2x/edit/master/docs/book/src/{path}"

13

docs/book/src/README.md

Normal file


@ -0,0 +1,13 @@

# Introduction

<p align="center">
<img src="https://github.com/user-attachments/assets/5cd63373-e806-474f-94ec-6e04963bf90f"/>
</p>

This site hosts the documentation for the Video2X project, a machine learning-based lossless video super resolution & frame interpolation framework.

The project's homepage is on GitHub at [https://github.com/k4yt3x/video2x](https://github.com/k4yt3x/video2x).

If you have any questions or suggestions, or find any issues in the documentation, please [open an issue](https://github.com/k4yt3x/video2x/issues/new/choose) on GitHub.

> 🚧 Some pages are still under construction.

35

docs/book/src/SUMMARY.md

Normal file


@ -0,0 +1,35 @@

# Summary

[Introduction](README.md)

# Building

- [Building](building/README.md)
  - [Windows](building/windows.md)
  - [Windows (Qt6)](building/windows-qt6.md)
  - [Linux](building/linux.md)

# Installing

- [Installing](installing/README.md)
  - [Windows (Command Line)](installing/windows.md)
  - [Windows (Qt6 GUI)](installing/windows-qt6.md)
  - [Linux](installing/linux.md)

# Running

- [Running](running/README.md)
  - [Desktop](running/desktop.md)
  - [Command Line](running/command-line.md)
  - [Container](running/container.md)

# Developing

- [Developing](developing/README.md)
  - [Architecture](developing/architecture.md)
  - [libvideo2x](developing/libvideo2x.md)

# Other

- [Other](other/README.md)
  - [History](other/history.md)

3

docs/book/src/building/README.md

Normal file


@ -0,0 +1,3 @@

# Building

Instructions for building the project.

50

docs/book/src/building/linux.md

Normal file


@ -0,0 +1,50 @@

# Linux

Instructions for building this project on Linux.

## Arch Linux

Arch users can build the latest version of the project from the AUR package `video2x-git`. The project's repository also contains another example PKGBUILD at `packaging/arch/PKGBUILD`.

```bash
# Build only
git clone https://aur.archlinux.org/video2x-git.git
cd video2x-git
makepkg -s
```

To build manually from source, follow the instructions below.

```bash
# Install build and runtime dependencies
# See the PKGBUILD file for the up-to-date list of dependencies
sudo pacman -S ffmpeg ncnn vulkan-driver opencv spdlog boost-libs
sudo pacman -S git cmake make clang pkgconf vulkan-headers openmp boost

# Clone the repository
git clone --recurse-submodules https://github.com/k4yt3x/video2x.git
cd video2x

# Build the project
make build
```

The built binaries will be located in the `build` directory.

## Ubuntu

Ubuntu users can use the `Makefile` to build the project automatically. The `ubuntu2404` and `ubuntu2204` targets are available for Ubuntu 24.04 and 22.04, respectively. `make` will automatically install the required dependencies, build the project, and package it into a `.deb` package file. Performing the build in a container is recommended, both to keep the build environment consistent and to avoid leaving extra build packages on your system.

```bash
# make needs to be installed manually
sudo apt-get update && sudo apt-get install make

# Clone the repository
git clone --recurse-submodules https://github.com/k4yt3x/video2x.git
cd video2x

# Build the project
make ubuntu2404
```

The built `.deb` package will be located in the current directory.
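As a rough sketch of the container approach recommended above, the hypothetical function below runs the `ubuntu2404` target inside a throwaway `ubuntu:24.04` container. It assumes the repository has already been cloned (with submodules) into the current directory and that the target can run as root inside the container without `sudo`; the function name and the `DOCKER` override (for example `DOCKER=podman`) are illustrative conveniences, not part of the project.

```bash
# Hypothetical helper: build the Ubuntu 24.04 .deb inside a disposable container.
# Assumes the repository (with submodules) is cloned into the current directory
# and that "make ubuntu2404" can run as root in the container without sudo.
build_deb_in_container() {
  # DOCKER is an assumed override, e.g. DOCKER=podman
  local runtime="${DOCKER:-docker}"
  # Mount the checkout at /video2x so the .deb ends up back in the host directory
  "$runtime" run --rm -v "$PWD":/video2x -w /video2x ubuntu:24.04 \
    bash -c 'apt-get update && apt-get install -y make && make ubuntu2404'
}
```

Run `build_deb_in_container` from the repository root. Because the build runs as root inside the container, the resulting `.deb` may be owned by root on the host.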


55

docs/book/src/building/windows-qt6.md

Normal file


@ -0,0 +1,55 @@

# Windows (Qt6)

Instructions for building the Qt6 GUI of this project on Windows.

## 1. Prerequisites

These dependencies must be installed before building the project. This tutorial assumes that Qt6 has been installed to the default location (`C:\Qt`).

- [Visual Studio 2022](https://visualstudio.microsoft.com/vs/)
  - Workload: Desktop development with C++
- [winget-cli](https://github.com/microsoft/winget-cli)
- [Qt6](https://www.qt.io/download)
  - Component: Qt6 with MSVC 2022 64-bit
  - Component: Qt Creator

## 2. Clone the Repository

```bash
# Install Git if not already installed
winget install -e --id=Git.Git

# Clone the repository
git clone --recurse-submodules https://github.com/k4yt3x/video2x-qt6.git
cd video2x-qt6
```

## 3. Install Dependencies

You need to build the `libvideo2x` shared library before building the Qt6 GUI. Put the built binaries in `third_party/libvideo2x-shared`.

```bash
# Versions of manually installed dependencies
$ffmpegVersion = "7.1"

# Download and extract FFmpeg
# curl.exe avoids Windows PowerShell 5.1's "curl" alias for Invoke-WebRequest
curl.exe -Lo ffmpeg-shared.zip "https://github.com/GyanD/codexffmpeg/releases/download/$ffmpegVersion/ffmpeg-$ffmpegVersion-full_build-shared.zip"
Expand-Archive -Path ffmpeg-shared.zip -DestinationPath third_party
Rename-Item -Path "third_party/ffmpeg-$ffmpegVersion-full_build-shared" -NewName ffmpeg-shared
```

## 4. Build the Project

1. Open the `CMakeLists.txt` file in Qt Creator as the project file.
2. Click the hammer icon at the bottom left of the window to build the project.
3. The built binaries will be located in the `build` directory.

After the build finishes, you need to copy the Qt6 DLLs and other dependencies into the build directory to run the application. Before running the following command, remove everything in the release directory except `video2x-qt6.exe` and the `.qm` files, since the other files are not required to run the application. Then, run the following command to copy the Qt6 runtime DLLs:

```bash
C:\Qt\6.8.0\msvc2022_64\bin\windeployqt.exe --release --compiler-runtime .\build\Desktop_Qt_6_8_0_MSVC2022_64bit-Release\video2x-qt6.exe
```

You also need to copy the `libvideo2x` shared library into the build directory. Copy all files under `third_party/libvideo2x-shared` to the release directory except `include`, `libvideo2x.lib`, and `video2x.exe`.

Now you should be able to run the application by double-clicking `video2x-qt6.exe`.

56

docs/book/src/building/windows.md

Normal file


@ -0,0 +1,56 @@

# Windows

Instructions for building this project on Windows.

## 1. Prerequisites

The following tools must be installed manually:

- [Visual Studio 2022](https://visualstudio.microsoft.com/vs/)
  - Workload: Desktop development with C++
- [winget-cli](https://github.com/microsoft/winget-cli)

## 2. Clone the Repository

```bash
# Install Git if not already installed
winget install -e --id=Git.Git

# Clone the repository
git clone --recurse-submodules https://github.com/k4yt3x/video2x.git
cd video2x
```

## 3. Install Dependencies

```bash
# Install CMake
winget install -e --id=Kitware.CMake

# Install Vulkan SDK
winget install -e --id=KhronosGroup.VulkanSDK

# Versions of manually installed dependencies
$ffmpegVersion = "7.1"
$ncnnVersion = "20240820"

# Download and extract FFmpeg
# curl.exe avoids Windows PowerShell 5.1's "curl" alias for Invoke-WebRequest
curl.exe -Lo ffmpeg-shared.zip "https://github.com/GyanD/codexffmpeg/releases/download/$ffmpegVersion/ffmpeg-$ffmpegVersion-full_build-shared.zip"
Expand-Archive -Path ffmpeg-shared.zip -DestinationPath third_party
Rename-Item -Path "third_party/ffmpeg-$ffmpegVersion-full_build-shared" -NewName ffmpeg-shared

# Download and extract ncnn
curl.exe -Lo ncnn-shared.zip "https://github.com/Tencent/ncnn/releases/download/$ncnnVersion/ncnn-$ncnnVersion-windows-vs2022-shared.zip"
Expand-Archive -Path ncnn-shared.zip -DestinationPath third_party
Rename-Item -Path "third_party/ncnn-$ncnnVersion-windows-vs2022-shared" -NewName ncnn-shared
```

## 4. Build the Project

```bash
cmake -S . -B build -DUSE_SYSTEM_NCNN=OFF -DUSE_SYSTEM_SPDLOG=OFF -DUSE_SYSTEM_BOOST=OFF `
    -DCMAKE_BUILD_TYPE=Release -DCMAKE_INSTALL_PREFIX=build/libvideo2x-shared
cmake --build build --config Release --parallel --target install
```

The built binaries will be located in `build/libvideo2x-shared`.

3

docs/book/src/developing/README.md

Normal file


@ -0,0 +1,3 @@

# Developing

Development-related instructions and guidelines for this project.

38

docs/book/src/developing/architecture.md

Normal file


@ -0,0 +1,38 @@

# Architecture

The basic working principles of Video2X and its historical architectures.

## Video2X <=4.0.0 (Legacy)

Below is the earliest architecture of Video2X. It uses FFmpeg to extract all of the frames from the video to disk, processes every frame and writes the results to disk, and then runs FFmpeg again to assemble the processed frames back into a video. The drawbacks of this approach are apparent:

- Storing all frames of the video on disk twice requires a huge amount of storage, often hundreds of gigabytes.
- The process performs a large amount of disk I/O (reading from and writing to disk), which is inefficient: each step writes its results to disk, and the next step has to read them back.

_Video2X architecture before version 5.0.0_
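To put "hundreds of gigabytes" in perspective, here is a back-of-the-envelope estimate. It assumes uncompressed RGB24 frames (3 bytes per pixel) and a whole number of frames per second; the image files actually written to disk are usually compressed, so treat the result as an upper bound. The function name is hypothetical.

```bash
# Hypothetical helper: bytes needed to hold every frame of a clip as raw RGB24.
# Usage: raw_rgb24_bytes WIDTH HEIGHT FPS SECONDS
raw_rgb24_bytes() {
  local width="$1" height="$2" fps="$3" seconds="$4"
  # 3 bytes per pixel (R, G, B) x pixels per frame x total number of frames
  echo $(( width * height * 3 * fps * seconds ))
}
```

For example, `raw_rgb24_bytes 3840 2160 24 1440` (a 24-minute clip upscaled to 3840x2160 at 24 fps) prints `859963392000`, roughly 860 GB for the upscaled frames alone, before the extracted source frames are counted.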

## Video2X 5.0.0 (Legacy)

Video2X 5.0.0's architecture was designed to address the inefficient disk I/O. This version uses frame serving to streamline the process: all stages are started simultaneously, and frames are passed between stages through stdin/stdout pipes. However, this architecture also has several issues:

- At least two instances of FFmpeg are started, or three in the case of Anime4K.
- Passing frames through stdin/stdout is fragile. If frame sizes are incorrect, FFmpeg hangs waiting for the next frame.
- The frames entering and leaving each stage must be RGB24, even when that is unnecessary. For instance, if the upscaler is Anime4K, yuv420p would be acceptable, but the decoder first converts each frame to RGB24, which is then converted back into the YUV color space for libplacebo.

_Video2X 5.x.x architecture_
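The fragility comes from how raw frames travel through a pipe: a raw RGB24 stream carries no frame boundaries, so the reader simply consumes `width × height × 3` bytes per frame. The hypothetical helper below (not part of Video2X) counts the complete frames in such a stream and reports any leftover bytes. A non-zero remainder means the two sides disagree about the frame size, which in a live pipeline can leave one process blocked waiting for bytes that never arrive.

```bash
# Hypothetical helper: check a raw RGB24 stream on stdin against a frame size.
# Usage: some_producer | check_rawvideo_stream WIDTH HEIGHT
check_rawvideo_stream() {
  local width="$1" height="$2"
  local frame_size=$(( width * height * 3 ))  # bytes per RGB24 frame
  local total
  total=$(wc -c)                              # consume stdin and count its bytes
  echo "frames=$(( total / frame_size )) remainder=$(( total % frame_size ))"
}
```

For example, `head -c 30 /dev/zero | check_rawvideo_stream 2 2` reports `frames=2 remainder=6`: two complete 12-byte frames followed by a partial one.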

## Video2X 6.0.0 (Current)

The newest version of Video2X's architecture addresses the issues of the previous architectures while improving efficiency:

- Frames are decoded only once and encoded only once, using FFmpeg's libraries (libavformat and libavcodec).
- Frames are passed as `AVFrame` structs, and their pixel formats are converted only when needed.
- Frames always stay in memory, avoiding the bottlenecks of disk I/O and pipes.
- Frames stay on the hardware (GPU) unless they need to be downloaded for processing in software (partially implemented).

_Video2X 6.0.0 architecture_

5

docs/book/src/developing/libvideo2x.md

Normal file


@ -0,0 +1,5 @@

# libvideo2x

Instructions for using libvideo2x's C API in your own projects.

libvideo2x's API is still highly volatile. This document will be updated as the API stabilizes.

3

docs/book/src/installing/README.md

Normal file


@ -0,0 +1,3 @@

# Installing

Instructions for installing this project.

19

docs/book/src/installing/linux.md

Normal file


@ -0,0 +1,19 @@

# Linux

Instructions for installing this project on Linux systems.

## Arch Linux

Arch users can install the project from the AUR, for example with an AUR helper such as `yay`:

```bash
yay -S video2x-git
```

## Ubuntu

Ubuntu users can download the `.deb` packages from the [releases page](https://github.com/k4yt3x/video2x/releases/latest). Install the package with the APT package manager:

```bash
sudo apt-get install ./video2x-linux-ubuntu2404-amd64.deb
```

7

docs/book/src/installing/windows-qt6.md

Normal file


@ -0,0 +1,7 @@

# Windows (Qt6)

You can download the installer for Video2X Qt6 from the [releases page](https://github.com/k4yt3x/video2x/releases/latest). The installer file is named `video2x-qt6-windows-amd64-installer.exe`.

Download the installer, then double-click it to start the installation. The installer guides you through the process, and you can choose the installation directory and whether to create a desktop shortcut.

After the installation is complete, you can start Video2X Qt6 by double-clicking the desktop shortcut.

12

docs/book/src/installing/windows.md

Normal file


@ -0,0 +1,12 @@

# Windows

You can download the latest Windows build from the [releases page](https://github.com/k4yt3x/video2x/releases/latest). The following PowerShell commands download the latest pre-built binaries and install them to `%LOCALAPPDATA%\Programs\video2x`:

```bash
$latestTag = (Invoke-RestMethod -Uri https://api.github.com/repos/k4yt3x/video2x/releases/latest).tag_name
curl.exe -LO "https://github.com/k4yt3x/video2x/releases/download/$latestTag/video2x-windows-amd64.zip"
New-Item -Path "$env:LOCALAPPDATA\Programs\video2x" -ItemType Directory -Force
Expand-Archive -Path .\video2x-windows-amd64.zip -DestinationPath "$env:LOCALAPPDATA\Programs\video2x"
```

You can then add `%LOCALAPPDATA%\Programs\video2x` to your `PATH` environment variable to run `video2x` from the command line.

1

docs/book/src/other/README.md

Normal file


@ -0,0 +1 @@

# Other

47

docs/book/src/other/history.md

Normal file


@ -0,0 +1,47 @@

|

||||

# History

|

||||

|

||||

Video2X came a long way from its original concepts to what it has become today. It started as a simple concept of "waifu2x can upscale images, and a video is just a sequence of images". Then, a PoC was made which can barely upscale a single video with waifu2x-caffe and with fixed settings. Now, Video2X has become a comprehensive and customizable video upscaling tool with a nice GUI and a community around it. This article documents in detail how Video2X's concept was born, and what happened during its development.

|

||||

|

||||

## Origin

|

||||

|

||||

The story started with me watching Bad Apple!!'s PV in early 2017. The original PV has a size of `512x384`, which is quite small and thus, quite blurry.

|

||||

|

||||

\

|

||||

_A screenshot of the original Bad Apple!! PV_

|

||||

|

||||

Around the same time, I was introduced to this amazing project named waifu2x, which upscales (mostly anime) images using machine learning. This created a spark in my head: **if images can be upscaled, aren't videos just a sequence of images?** Then, I started making a proof-of-concept by manually extracting all frames from the original PV using FFmpeg, putting them through waifu2x-caffe, and assembling the frames back into a video again using FFmpeg. This was how the ["4K BadApple!! waifu2x Lossless Upscaled"](https://www.youtube.com/watch?v=FiX7ygnbAHw) video was created.

|

||||

|

||||

\

|

||||

_Thumbnail of the "4K BadApple!! waifu2x Lossless Upscaled" video_

|

||||

|

||||

After this experiment completed successfully, I started thinking about making an automation pipeline, where this manual process will be streamlined, and each of the steps will be handled automatically.

|

||||

|

||||

## Proof-of-Concept

|

||||

|

||||

When I signed up for Hack the Valley II in late 2017, I didn't know what I was going to make during that hackathon. Our team sat down and thought about what to make for around an hour, but no one came up with anything interesting. All of a sudden, I remembered, "Hey, isn't there a PoC I wanted to make? How about making that our hackathon project?" I then temporarily name the project Video2X, following waifu2x's scheme. Video2X was then born.

|

||||

|

||||

I originally wanted to write Video2X for Linux, but it's too complicated to get the original [nagadomi/waifu2x](https://github.com/nagadomi/waifu2x)'s version of waifu2x running, so waifu2x-caffe written for Windows was used to save time. This is why the first version of Video2X only supports Windows, and can only use waifu2x-caffe as its upscaling driver.

|

||||

|

||||

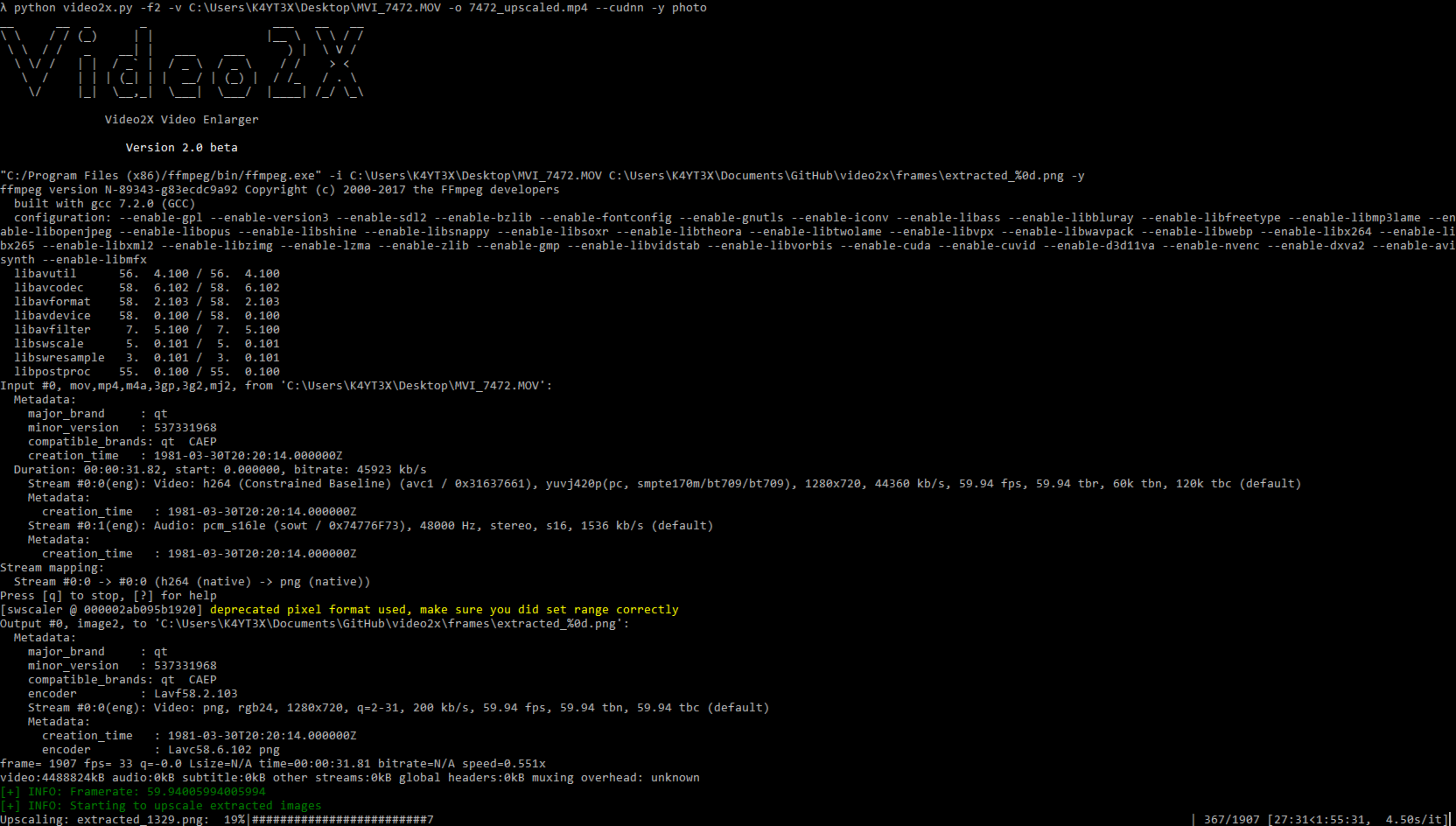

\

|

||||

_video2x.py file in the first version of Video2X_

|

||||

|

||||

At the end of the hackathon, we managed to make a [sample comparison video](https://www.youtube.com/watch?v=mGEfasQl2Zo) based on [Spirited Away's official trailer](https://www.youtube.com/watch?v=ByXuk9QqQkk). This video was then published on YouTube and is the same demo video showcased in Video2X's repository. The original link was at [https://www.youtube.com/watch?v=PG94iPoeoZk](https://www.youtube.com/watch?v=PG94iPoeoZk), but it has been moved lately to another account under K4YT3X's name.

|

||||

|

||||

\

|

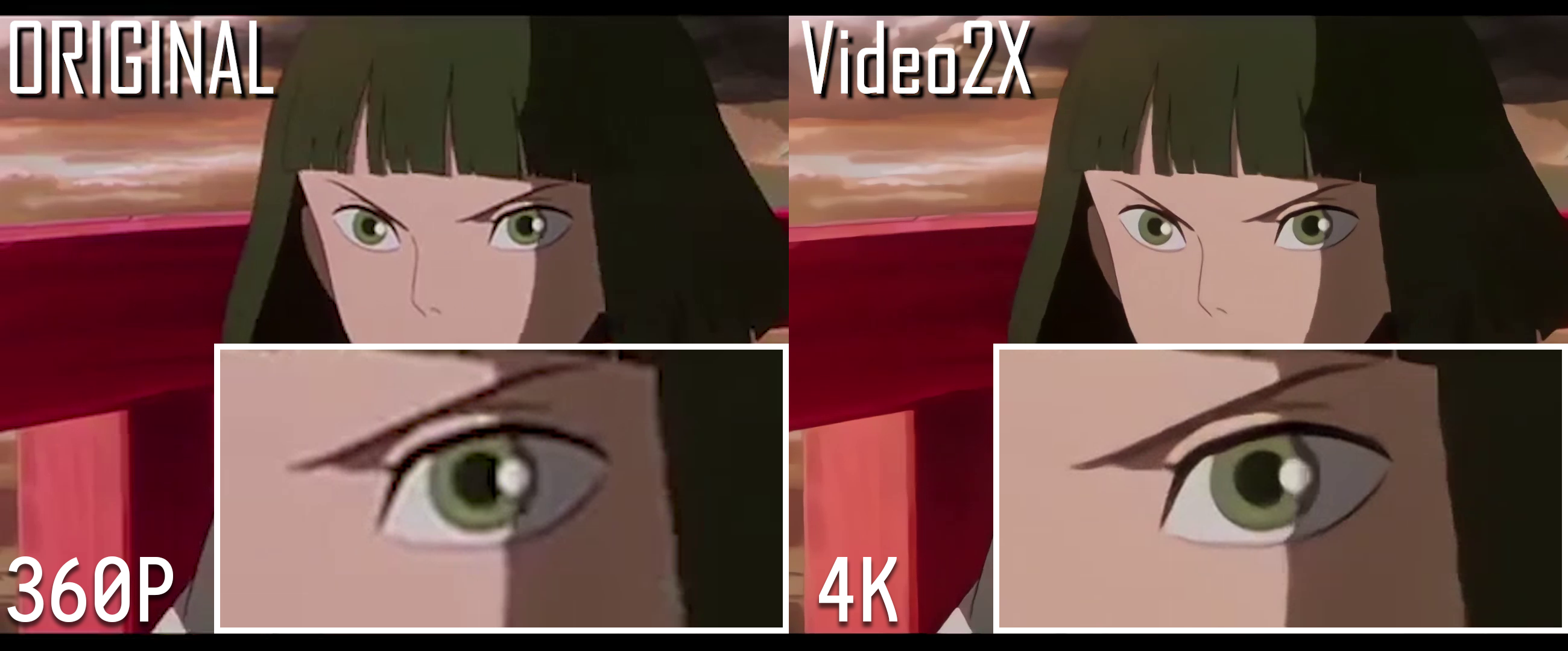

||||

_Upscale Comparison Demonstration_

|

||||

|

||||

When we demoed this project, there wasn't so much interest expressed by the judges. We were, however, suggested to pitch our project to Adobe. That didn't end up going anywhere, either. Like most of the other projects in a hackathon, this project didn't win any awards, and just almost vanished after the hackathon was over.

|

||||

|

||||

<!--\-->

|

||||

|

||||

_[Image Removed]_\

|

||||

_Our team in Hack the Valley II. You can see Video2X's demo video on the computer screens. Image blurred for privacy._

|

||||

|

||||

## Video2X 2.0

|

||||

|

||||

Roughly three months after the hackathon, I came back to this project and decided it was worth continuing. Although not many people in the hackathon found this project interesting or useful, I saw value in this project. This was further reinforced by the stars I've received in the project's repository.

|

||||

|

||||

I continued working on enhancing Video2X and fixing bugs, and Video2X 2.0 was released. The original version of Video2X was only made as a proof-of-concept for the hackathon. A lot of the usability and convenience aspects are ignored in exchange for development speed. The 2.0 version addressed a lot of these issues and made Video2X usable for regular users. Video2X has then also been converted from a hackathon project to a personal open-source project.

|

||||

|

||||

\

|

||||

_Screenshot of Video2X 2.0_

|

||||

3

docs/book/src/running/README.md

Normal file


@ -0,0 +1,3 @@

# Running

Instructions for running and using this project.

49

docs/book/src/running/command-line.md

Normal file


@ -0,0 +1,49 @@

# Command Line

Instructions for running Video2X from the command line.

This page does not cover every available option. For help with the other options, run Video2X with the `--help` argument.

## Basics

Use the following command to upscale a video by 4x with RealESRGAN:

```bash
video2x -i input.mp4 -o output.mp4 -f realesrgan -r 4 -m realesr-animevideov3
```

Use the following command to upscale a video to 3840x2160 with libplacebo + Anime4K v4 Mode A+A:

```bash
video2x -i input.mp4 -o output.mp4 -f libplacebo -s anime4k-v4-a+a -w 3840 -h 2160
```
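To process several files, the RealESRGAN command above can be wrapped in a shell loop. This is a minimal sketch: the function name is hypothetical, it only handles `.mp4` files in a single directory, and the `VIDEO2X` variable (defaulting to `video2x`) is an assumed override for pointing at a different binary.

```bash
# Hypothetical helper: upscale every .mp4 in SRC_DIR into DST_DIR using the
# RealESRGAN command from the Basics section.
# Usage: upscale_dir SRC_DIR DST_DIR
upscale_dir() {
  local src="$1" dst="$2" bin="${VIDEO2X:-video2x}"
  local f
  mkdir -p "$dst"
  for f in "$src"/*.mp4; do
    [ -e "$f" ] || continue  # the glob matched nothing; skip the literal pattern
    # Keep the original file name; stop at the first failed upscale
    "$bin" -i "$f" -o "$dst/$(basename "$f")" \
      -f realesrgan -r 4 -m realesr-animevideov3 || return
  done
}
```

For example, `upscale_dir videos upscaled` writes `upscaled/episode01.mp4` for `videos/episode01.mp4`, and so on for every `.mp4` file in `videos`.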

## Advanced

You can specify a custom MPV-compatible GLSL shader file with the `--shader, -s` argument:

```bash
video2x -i input.mp4 -o output.mp4 -f libplacebo -s path/to/custom/shader.glsl -w 3840 -h 2160
```

List the available GPUs with `--list-gpus, -l`:

```bash
$ video2x --list-gpus
0. NVIDIA RTX A6000
    Type: Discrete GPU
    Vulkan API Version: 1.3.289
    Driver Version: 565.228.64
```

Select which GPU to use with the `--gpu, -g` argument, passing an index from the `--list-gpus` output:

```bash
video2x -i input.mp4 -o output.mp4 -f realesrgan -r 4 -m realesr-animevideov3 -g 1
```

Specify arbitrary extra FFmpeg encoder options with the `--extra-encoder-options, -e` argument:

```bash
video2x -i input.mkv -o output.mkv -f realesrgan -m realesrgan-plus -r 4 -c libx264rgb -e crf=17 -e preset=veryslow -e tune=film
```

57

docs/book/src/running/container.md

Normal file


@ -0,0 +1,57 @@

# Container

Instructions for running the Video2X container.

## Prerequisites

- Docker, Podman, or another OCI-compatible runtime
- A GPU that supports the Vulkan API
  - Check the [Vulkan Hardware Database](https://vulkan.gpuinfo.org/) to see if your GPU supports Vulkan

## Upscaling a Video

This section documents how to upscale a video. Replace `$TAG` with an appropriate container tag (e.g., `6.1.1`). A list of available tags can be found [here](https://github.com/k4yt3x/video2x/pkgs/container/video2x).

### AMD GPUs

Make sure your host has the proper GPU and Vulkan libraries and drivers, then use the following command to launch the container:

```shell
docker run --gpus all -it --rm -v $PWD/data:/host ghcr.io/k4yt3x/video2x:$TAG -i standard-test.mp4 -o output.mp4 -f realesrgan -r 4 -m realesr-animevideov3
```

### NVIDIA GPUs

In addition to installing the proper drivers on your host, you must also install `nvidia-docker2` (NVIDIA Container Toolkit) on the host to use NVIDIA GPUs in containers. Below are instructions for installing it on some popular Linux distributions:

- Debian/Ubuntu
  - Follow the [official guide](https://docs.nvidia.com/datacenter/cloud-native/container-toolkit/install-guide.html#setting-up-nvidia-container-toolkit) to install `nvidia-docker2`
- Arch/Manjaro
  - Install `nvidia-container-toolkit` from the AUR
    - E.g., `yay -S nvidia-container-toolkit`

Once all the prerequisites are installed, you can launch the container:

```shell
docker run --gpus all -it --rm -v $PWD:/host ghcr.io/k4yt3x/video2x:$TAG -i standard-test.mp4 -o output.mp4 -f realesrgan -r 4 -m realesr-animevideov3
```

If the command above doesn't work, depending on your nvidia-docker version and other environment-specific factors, you can also try setting `no-cgroups = true` in `/etc/nvidia-container-runtime/config.toml` and passing the NVIDIA devices into the container:

```shell
docker run --gpus all --device=/dev/nvidia0 --device=/dev/nvidiactl --runtime nvidia -it --rm -v $PWD:/host ghcr.io/k4yt3x/video2x:$TAG -i standard-test.mp4 -o output.mp4 -f realesrgan -r 4 -m realesr-animevideov3
```

If you still get a `vkEnumeratePhysicalDevices failed -3` error at this point, try adding the `--privileged` flag to give the container the same level of permissions as the host:

```shell
docker run --gpus all --privileged -it --rm -v $PWD:/host ghcr.io/k4yt3x/video2x:$TAG -i standard-test.mp4 -o output.mp4 -f realesrgan -r 4 -m realesr-animevideov3
```

### Intel GPUs

Similar to NVIDIA GPUs, you can add `--gpus all` or `--device /dev/dri` to pass the GPU into the container. Adding `--privileged` might help with performance (thanks @NukeninDark).

```shell
docker run --gpus all --privileged -it --rm -v $PWD:/host ghcr.io/k4yt3x/video2x:$TAG -i standard-test.mp4 -o output.mp4 -f realesrgan -r 4 -m realesr-animevideov3
```
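If you run the container often, the commands above can be wrapped in a small shell function. This is a sketch, not part of the project: the function name and the `VIDEO2X_TAG` and `DOCKER` variables are hypothetical, and it uses the `--gpus all` form from the examples above, so adjust the flags for your GPU as described in the relevant section.

```shell
# Hypothetical wrapper around the container commands above.
# VIDEO2X_TAG selects the image tag (e.g. 6.1.1); DOCKER selects the runtime.
video2x_container() {
  local runtime="${DOCKER:-docker}"
  local tag="${VIDEO2X_TAG:?set VIDEO2X_TAG to a container tag}"
  # Mount the current directory at /host, as in the NVIDIA and Intel examples
  "$runtime" run --gpus all -it --rm -v "$PWD":/host \
    "ghcr.io/k4yt3x/video2x:${tag}" "$@"
}
```

Usage: `VIDEO2X_TAG=6.1.1 video2x_container -i standard-test.mp4 -o output.mp4 -f realesrgan -r 4 -m realesr-animevideov3`. Any arguments after the function name are passed straight through to Video2X inside the container.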


3

docs/book/src/running/desktop.md

Normal file


@ -0,0 +1,3 @@

# Desktop

TODO.
